Detecting AI-Generated Text: Things to Watch For

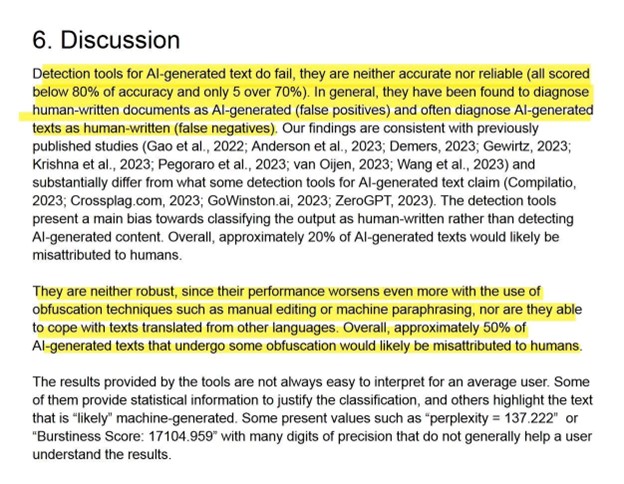

(Updated 2/17/2025) First and foremost, DO NOT rely solely on AI-text detection software to catch student usage. These tools are notoriously unreliable, producing large numbers of false positives and false negatives. The absolute best these detectors have performed is to correctly identify AI-generated text 80% of the time (below is a screenshot from a study that helped to demonstrate this). That means they are wrong on about one paper out of every five they look at! AI-detection tools have infamously identified human-written documents like the US Constitution and parts of the Bible as having been generated by AI. They are also discriminatory against students who don’t natively speak English, giving false positives for these students up to 70% of the time (!).
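
To make the "one in five" arithmetic concrete, here is a minimal Python sketch. It assumes the 80% figure can be treated as a simple overall accuracy rate, which is a simplification, and the class size of 30 is a made-up number for illustration only.

```python
def expected_misjudged(num_papers: int, accuracy: float) -> float:
    """Expected number of papers a detector gets wrong at a given accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return num_papers * (1.0 - accuracy)


# Hypothetical class of 30 papers, using the best-case 80% figure.
papers = 30
wrong = expected_misjudged(papers, 0.80)
print(f"Out of {papers} papers, expect about {round(wrong)} wrong calls.")
```

Even in the best case, a detector run over one class set would be expected to misjudge several papers, which is why it should never be the only evidence.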

Instead of relying on faulty detection tools to tell whether a piece of content was generated by an AI, pay closer attention to the writing itself and to how it was produced. Here are things to look for and tools to use:

- Compare to Writing They’ve Done in Class: The old methods remain the best, and will be the most resilient to new AI capabilities as they develop. So if you’re not interested in updating your detection methods as you keep constant track of developments in the field, it’s worth using methods like this one and the two that follow it. Students often have a unique writing style and voice that they maintain across their assignments. When reviewing a student’s work, look for significant deviations from this established style. Does the vocabulary, sentence structure, or complexity of ideas feel significantly different? Does the tone match the student’s usual approach? If the student normally struggles with grammar and spelling but suddenly hands in a flawless paper, it might be a sign of AI-generated content. Also, AI-generated text may lack the specific mistakes or idiosyncrasies that a student typically makes.

- Quiz the Student on the Content of Their Work: If you suspect that a piece of work has been generated by AI, one effective way to confirm your suspicion is by quizzing the student on the content of their work. Ask them to explain complex points, the reasoning behind their arguments, or the meaning of specific words or phrases they used. If they are unable to provide satisfactory answers or seem confused by their own work, it could be an indication that they didn’t write it themselves. Be aware, though, that students might also struggle with this if they’re nervous or if they completed the assignment a while ago and no longer remember the specifics.

- Use Google Docs’ Version History feature to detect human-like writing behavior: Google Docs keeps a version history that records small changes as they are made in a document. Having your students compose exclusively in Google Docs and share an editable copy of their drafts is a good way to track whether they’re pasting content wholesale from another source, or whether they’re working bit by bit in a more human fashion. Here are some instructions on how to use the feature.

- Unusual or Over-Complex Sentence Construction: AI might sometimes generate sentences that are grammatically correct but unusual or overly complex, especially when it’s trying to generate text based on a mixture of styles or genres.

- Overuse of bullet points: Some (not all!) AI text-generation tools tend to use a lot of bullet points to communicate in a concise, corporate way.

- Not Referencing Current Events (or lying about/misrepresenting them): Many AI models do not have real-time access to the internet and cannot provide up-to-date information or comment on current events. If an AI-generated text refers to events after its training cutoff, it’s likely extrapolating from patterns it learned and might not be accurate. Keep in mind, though, that many tools now have access to internet search tools and can understand uploaded documents in order to be “brought up to speed” even on events that happened after their initial training.

- Lack of Depth/Analysis: Another way to tell if an essay was written by an AI is if it lacks complex or original analysis. This is because machines are good at collecting data, but they’re not so good at turning it into something meaningful. As an example, try to get ChatGPT to give feedback on a student essay; it’s terrible at it! We’re nearing the point where AI can start to analyze writing, but its current responses are still very “robotic.” You’ll notice AI-generated writing is a lot better at static topics (history, facts, etc.) than at creative or analytical writing. However, be aware: AI tools are getting increasingly good at analysis, so this detection method is becoming less useful. Once Deep Research from OpenAI becomes more widely available, expect this detection method to be entirely irrelevant.

- Inaccurate Data or Quotes: If a prediction machine (which is what these AI text generators are) doesn’t know something but is still required to give an output, it’ll predict numbers and quotes based on patterns, and those predictions often aren’t accurate. So, if you’re reading student homework and you spot several figures or quotes that don’t match the facts, the work might have been generated with AI.

- Do the Sources Exist? Do They Say What the Writer Claims They Do?: While students have been inventing or misrepresenting sources for a long time, many AI text generators will create sources out of thin air that appear convincing. You should check every single source that your students cite if you’re concerned about their having used an AI text generation tool. However, be aware: AI tools are getting increasingly good at citing real sources, so this detection method is becoming less useful.

- Look Out for Weird Paraphrases: Many students use tools like Quillbot to take existing academic essays that their friends or classmates have written and “paraphrase” them so that they are not caught by Turnitin or other plagiarism checkers. This can result in turns of phrase being “translated” literally and in a way that doesn’t make sense, among other odd changes.
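
For the first item in the list above (comparing a submission to writing done in class), the idea can be roughed out in a few lines of Python. This is only an illustrative sketch: the function names `style_profile` and `style_shift`, the three metrics, and the sample sentences are all assumptions made up for this example, not an established method. A large shift is a reason to talk to the student, not proof of anything.

```python
import re
from statistics import mean


def style_profile(text: str) -> dict[str, float]:
    """Compute a few crude style metrics for a piece of writing."""
    # Split on runs of sentence-ending punctuation; drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    return {
        "avg_sentence_length": len(words) / len(sentences),
        "avg_word_length": mean(len(w) for w in words),
        "vocabulary_richness": len(set(words)) / len(words),
    }


def style_shift(known: str, submitted: str) -> dict[str, float]:
    """Relative change in each metric from the known sample to the submission."""
    base = style_profile(known)
    new = style_profile(submitted)
    return {name: (new[name] - base[name]) / base[name] for name in base}


# Made-up samples: short in-class writing versus a polished submission.
in_class = "I think the book was good. The main guy was brave. I liked the end."
submitted = (
    "The protagonist's unwavering determination illuminates the novel's "
    "central preoccupation with moral courage, which the author develops "
    "through a series of increasingly consequential decisions."
)

for metric, change in style_shift(in_class, submitted).items():
    print(f"{metric}: {change:+.0%}")
```

Short samples make these numbers noisy, so a comparison like this works best with several paragraphs of known in-class writing, and it complements rather than replaces reading the work yourself.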

Works Cited

Gluska, Justin. “How to Check If Something Was Written with AI.” Gold Penguin, 6 June 2023, https://goldpenguin.org/blog/check-for-ai-content/.

Needle, Flori. “AI Detection: How to Pinpoint AI Generated Text and Imagery [+ Detection Tools].” HubSpot Blog, 23 June 2023, https://blog.hubspot.com/marketing/ai-detection.

“What are some distinctive features about AI-generated text that a professor might be able to use to differentiate from their students’ own writing” prompt. ChatGPT 4.0, 24 May version, 28 June 2023, https://chat.openai.com/share/52c2ecd9-4155-4d16-9952-43548019761c.